Processing 100 Million messages per day – Confluent Kafka S3 Sink Connector – a Success Story

Imagine needing to receive and store 100 million messages per day from a third party. That is an average of about 1,160 messages per second. Our customer subscribed to a Data Supplier that would send 100 million messages per day over a secured Kafka connection. On top of that, the Kafka cluster was hosted in a different cloud environment.
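As a quick sanity check on those figures, the average rate can be worked out directly. This is only back-of-the-envelope arithmetic that assumes a perfectly even flow over the day; real traffic is likely to be burstier, so peak rates would be higher.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds


def average_rate(messages_per_day: int) -> float:
    """Average messages per second, assuming an even flow across the day."""
    return messages_per_day / SECONDS_PER_DAY


daily_volume = 100_000_000        # volume stated in this article
tested_volume = 3 * daily_volume  # the 3x load test mentioned under Solution Benefits

print(f"{average_rate(daily_volume):,.0f} msg/s")   # 1,157 msg/s
print(f"{average_rate(tested_volume):,.0f} msg/s")  # 3,472 msg/s
```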

Please read further to learn how we established that massive processing capability.

About Our Customer and the need

Our customer offers comprehensive business intelligence solutions that help its own customers meet diverse business intelligence needs. Our customer not only compiles the data but also structures it according to its unique properties. That structure, the associations within the data and the customer's domain knowledge are what enable it to provide unique insights from the data.

The customer requires data from its own customers and orthogonal data from third parties. Orthogonal data such as weather or traffic information readily qualifies as big data on all four counts: volume, variety, velocity and veracity.

For one such big orthogonal data set, the customer subscribed to a Data Supplier. The Data Supplier provided access to its own Kafka topic on its own cloud. The data flow was massive, and the topic could occasionally contain bad messages.

The customer contacted VisionFirst Technologies to develop an end-to-end solution, from message retrieval to storage in an S3 bucket.

Technical Architecture

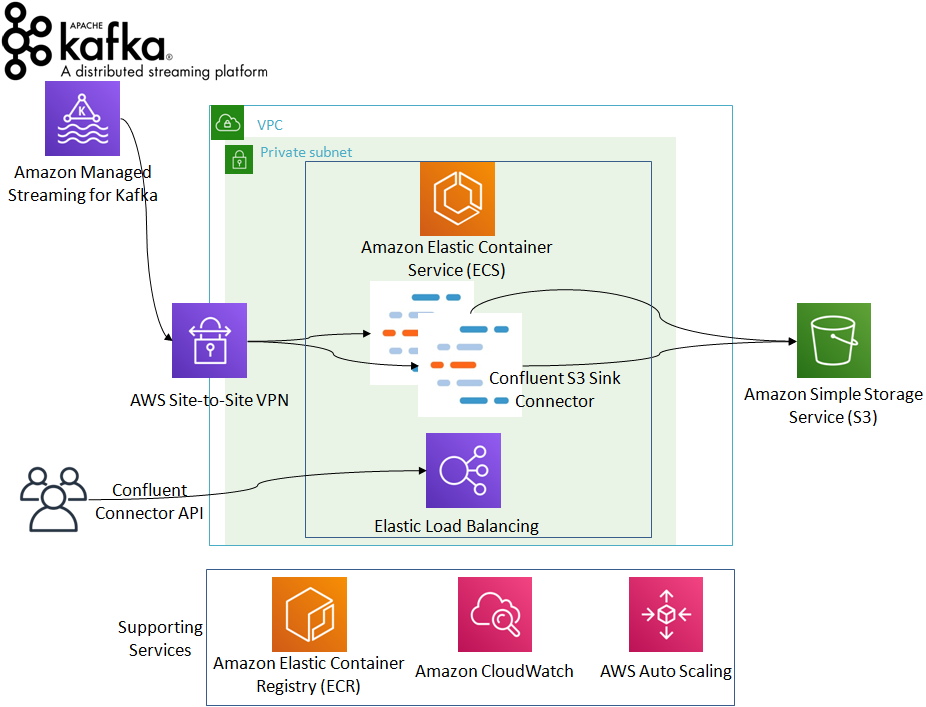

The network

We suggested establishing an AWS site-to-site VPN between the Data Supplier's network and the customer's VPC. Please refer to Practical Guide to creating AWS site to site VPN to learn how to establish a site-to-site VPN connection.

Connector

We chose the Confluent Kafka S3 Sink Connector, which can process millions of messages when several connectors run in parallel.

In addition, we provided an endpoint, exposed via an Elastic Load Balancer, through which the customer can submit more jobs as required from time to time.
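To illustrate what submitting a job could look like, here is a minimal sketch that assumes the endpoint forwards to the standard Kafka Connect REST API (`POST /connectors`). The article does not publish the actual configuration, so the host name, connector name, topic, bucket, region and sizing values below are all hypothetical placeholders; only the configuration keys and class names come from the Confluent S3 sink connector itself.

```python
import json
import urllib.request


def build_s3_sink_config(topic: str, bucket: str, region: str, tasks: int) -> dict:
    """Return a basic S3 sink connector config that writes JSON objects."""
    return {
        "connector.class": "io.confluent.connect.s3.S3SinkConnector",
        "tasks.max": str(tasks),  # upper bound on parallel tasks
        "topics": topic,
        "s3.bucket.name": bucket,
        "s3.region": region,
        "storage.class": "io.confluent.connect.s3.storage.S3Storage",
        "format.class": "io.confluent.connect.s3.format.json.JsonFormat",
        "flush.size": "10000",  # records per S3 object (illustrative value)
    }


def build_submit_request(connect_url: str, name: str, config: dict) -> urllib.request.Request:
    """Build (but do not send) the POST /connectors request."""
    body = json.dumps({"name": name, "config": config}).encode("utf-8")
    return urllib.request.Request(
        url=f"{connect_url.rstrip('/')}/connectors",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical values, for illustration only.
config = build_s3_sink_config(
    topic="supplier-orthogonal-data",
    bucket="customer-landing-bucket",
    region="us-east-1",
    tasks=4,
)
request = build_submit_request(
    "http://connect.example.internal:8083/", "s3-sink-orthogonal-data", config
)
# To actually submit the job: urllib.request.urlopen(request)
```

Building the request separately from sending it keeps the sketch runnable without a live Connect cluster and makes the payload easy to inspect before submission.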

Auto Scaling and Fault Tolerance

We created a Docker image that wrapped the Confluent Kafka S3 Sink Connector. The connector itself was made fault tolerant with minimal settings: if an error occurs while processing data (for example, a badly formatted message), the connector captures the error and puts that message into another Kafka topic that serves as a Dead Letter Queue (DLQ).
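The article does not list the exact settings that were used. As a sketch, the standard Kafka Connect error-handling properties that produce this DLQ behaviour look roughly like the following; the helper name `with_dead_letter_queue`, the DLQ topic name and the replication factor are assumptions made for illustration.

```python
def with_dead_letter_queue(config: dict, dlq_topic: str, replication_factor: int = 3) -> dict:
    """Return a copy of a sink connector config with DLQ error handling enabled."""
    merged = dict(config)  # leave the caller's dict untouched
    merged.update({
        # Keep running when a record cannot be converted or transformed.
        "errors.tolerance": "all",
        # Route the failed record to this topic instead of dropping it.
        "errors.deadletterqueue.topic.name": dlq_topic,
        "errors.deadletterqueue.topic.replication.factor": str(replication_factor),
        # Attach failure details (such as the exception) as record headers.
        "errors.deadletterqueue.context.headers.enable": "true",
        # Also log each failure in the worker log.
        "errors.log.enable": "true",
        "errors.log.include.messages": "true",
    })
    return merged


# Hypothetical base config and topic name.
base = {"topics": "supplier-orthogonal-data"}
tolerant = with_dead_letter_queue(base, dlq_topic="supplier-orthogonal-data-dlq")
```

With `errors.tolerance` left at its default of `none`, a single bad message would stop the task, which is why these few properties matter at this volume.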

The other failure that could occur was the connector failing while uploading data to S3. The Confluent Kafka S3 Sink Connector that we chose writes data to S3 as a multi-part upload and does not commit offsets to the Kafka topic until the S3 commit occurs; the S3 commit is very fast once the multi-part upload completes.
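The commit-after-upload ordering can be illustrated with a toy, in-memory model. This is not the connector's code: the list standing in for a Kafka partition, the dict standing in for S3, and the `crash_at` switch are all simplifications invented for this sketch. The object key is derived from the batch's starting offset, so a retried upload overwrites the same object rather than creating a duplicate.

```python
class UploadFailed(Exception):
    """Raised by the toy uploader to simulate a crash in the middle of an upload."""


def run_sink(log, store, state, batch_size, crash_at=None):
    """Copy `log` into `store` in batches, committing only after each upload."""
    while state["committed"] < len(log):
        start = state["committed"]
        batch = log[start:start + batch_size]
        if crash_at is not None and start <= crash_at < start + len(batch):
            # Crash before the upload finishes: nothing stored, nothing committed.
            raise UploadFailed(f"crashed while uploading batch starting at {start}")
        store[f"topic+0+{start:010d}.json"] = list(batch)  # the "S3 commit"
        state["committed"] = start + len(batch)            # offset commit comes last


log = [f"msg-{i}" for i in range(10)]
store, state = {}, {"committed": 0}

try:
    run_sink(log, store, state, batch_size=4, crash_at=5)  # fails in the second batch
except UploadFailed:
    pass  # state["committed"] is still 4, so messages 4..7 will be read again

run_sink(log, store, state, batch_size=4)  # restart resumes from the committed offset
delivered = [msg for key in sorted(store) for msg in store[key]]
print(delivered == log)  # True: nothing lost and nothing duplicated
```

Because the offset only advances after the object is stored, a crash can cause a batch to be re-read but never skipped.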

We deployed the image on ECS Fargate for a serverless architecture. ECS provides auto-scaling out of the box. Because Fargate has no EC2 instances to manage, scaling happens at the task level: ECS starts more tasks (scales out) when more processing is required, based on CPU and RAM utilization, and stops tasks (scales in) when load is low.
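One way to express this kind of scaling is a target-tracking policy on the ECS service's desired task count, registered through Application Auto Scaling. The sketch below only builds the request parameters; the cluster name, service name, capacity limits, target value and cooldowns are hypothetical and are not taken from the actual deployment.

```python
def build_scaling_requests(cluster: str, service: str, min_tasks: int, max_tasks: int,
                           cpu_target: float) -> tuple[dict, dict]:
    """Return parameters for register_scalable_target and put_scaling_policy."""
    common = {
        "ServiceNamespace": "ecs",
        "ResourceId": f"service/{cluster}/{service}",
        "ScalableDimension": "ecs:service:DesiredCount",
    }
    scalable_target = {**common, "MinCapacity": min_tasks, "MaxCapacity": max_tasks}
    cpu_policy = {
        **common,
        "PolicyName": f"{service}-cpu-target-tracking",
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            "TargetValue": cpu_target,  # average CPU percentage to hold
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "ECSServiceAverageCPUUtilization",
            },
            "ScaleOutCooldown": 60,   # seconds; react quickly to load
            "ScaleInCooldown": 300,   # seconds; shrink more cautiously
        },
    }
    return scalable_target, cpu_policy


# Hypothetical names and limits.
scalable_target, cpu_policy = build_scaling_requests(
    cluster="connect-cluster", service="s3-sink-service",
    min_tasks=2, max_tasks=10, cpu_target=60.0,
)
# With boto3 installed and AWS credentials configured, these would be passed as:
#   client = boto3.client("application-autoscaling")
#   client.register_scalable_target(**scalable_target)
#   client.put_scaling_policy(**cpu_policy)
```

A second policy using `ECSServiceAverageMemoryUtilization` would cover the RAM-based trigger in the same way.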

The Docker image that wraps the Confluent Kafka S3 Sink Connector is stored in ECR.

Destination of data

The connector uploads data to S3 using multi-part upload and was designed to resend messages even if it fails just before the S3 commit occurs. We chose an AWS S3 bucket for its industry-leading scalability, data availability, security, and performance.

Solution Benefits

The customer got a tool with which it can move more than 100 million messages per day from the Data Supplier to its own S3 bucket as JSON. In fact, we tested the solution at three times that volume (300 million messages per day) to ensure that it keeps working as the volume grows.

About VisionFirst Technologies Pvt. Ltd.

We are a group of researchers and practitioners of cutting-edge technology. We are an AWS Registered Partner. Our tech stack includes Machine Learning, offline/2G-tolerant mobile apps, web applications, IoT and Analytics. Please contact us to learn how we may help you.